For the work we want to show at the Crit, we've decided to focus on AI-generated art, and on whether we can make art that appears to be generated but isn't. We've explored AI hallucinations and the trouble they bring, and one other thing we've noticed is, conversely, how perfect AI images are. The composition and lighting are always beautiful, with a subtle cinematic glow or haze over everything, which I think, aside from hallucinations, is the biggest giveaway of AI-generated art. It is difficult to get such perfect natural lighting and composition, especially in the time it takes to press 'generate' in DALL-E or similar. I looked into this a bit more when I used descriptions of photos I'd taken as prompts for various generative AI models. We have decided to take two or three prompts, generate images we think are reproducible, and then try to create them physically using absolutely no AI (which also means no generative fill or subject selection in Photoshop, Final Cut or Premiere Pro). These are the three images we initially decided on:

These are all objectively pleasing images, with lovely composition and lighting, but with a studio setting and the right time of day, they should be reproducible.

Unfortunately, we ultimately used these only as a starting point for what we finally produced, as we quickly ran out of time to book studios and had to shoot in the Blue Shed instead. We had planned to book a studio and take some photographs for the first two images, but on Monday Dec 2nd, Tess, Anne and Peter decided to just get it done while I was on a job, so that we had the images and could focus on giving them the 'AI look'. However, they did not have the three reference images with them, so they had to recreate them from memory as closely as they could. In the end, the only photo we decided to use was this one of Peter holding a coffee cup, which Anne took:

I then took this into Photoshop to try to 'AI-ify' it. We wanted an audience to be unsure whether what we presented was human-made or AI-generated. To that end, I removed Peter's body, exaggerated the shadow on the wall, removed the pattern and logo on the cup and replaced them with text that looked more like the text AI produces (the word 'coffee' and various other random letters, with wind effects and distortion), filled the cup with coffee, removed the fluff on his sleeve, color-corrected his skin, extended his thumb, and added a sixth finger. The final step was to add a slight glow to everything, which I think was the least successful part of the whole process. Ultimately, I think that if you didn't know they were Peter's hands, you would definitely think this was AI-generated; at the crit, the people who spoke said they were certain the coffee cup image was AI and not human-made, citing his hands and fingers in particular.

We next looked into AI deepfakes; one particularly horrifying example shows Hitler and Churchill singing 'Video Killed the Radio Star', which I implore you to watch at your own peril:

We decided to look at different ways to replicate this. On the face of it, this seemed more challenging than photo manipulation, as deepfake software involves many more processes, and replicating it by hand would require hours upon hours of work. We did, however, find some online AI models that could move the face, eyes and mouth in photographs to 'bring them to life'.

Tess used After Effects to manipulate photographs we took of each other, making them blink, move very subtly, and make small mouth movements. This was really effective, especially the blinking, and the results definitely felt like they belonged in the uncanny valley.

Meanwhile, I tried a different approach. I filmed Tess's and Anne's faces while their eyes moved and they smiled, and then overlaid these videos onto the photographs of them. This felt equally uncanny, though the method worked better with Anne than with Tess. When filming Tess, I was closer to her and used a wide focal length, so her features appear larger than they do in the photo; it's a bit like seeing the start and end of a dolly zoom at the same time. I was further away when I recorded Anne, with a longer focal length, so her features are closer to those in the original photographs. During the crit, Eliana pointed out that the faces were moving but not breathing. I hadn't even considered this before, but once I noticed it, I couldn't unsee how eerie it looked.

The final approach we considered was to physically build it in Cinema 4D. Peter looked at various ways to do this, including scanning Anne and importing the scan into Cinema 4D, and I then tried removing her eyes and building in eyes and eyelids that would blink. The issues came with the quality of the scan: there was far too much 3D noise around her, and the scan wasn't nearly detailed enough to look even remotely real. Still, it was an interesting thing to try, and it certainly looks uncanny.

What was more effective was Peter animating a scan of himself. I scanned him in the library, and he imported the scan into Cinema 4D and cleaned it up a lot, separating out the legs, and the feet from the ground, and clearing up much of the noise, before adding a skeleton so that he could animate it. Here are a few examples of his renders:

My personal favorite is the one of his wax figure dancing — but we felt this didn't quite fit the atmosphere we were going for, so we used the clown walk instead in the end.

The last thing we needed was another photograph, so I took this photo of Anne holding her phone and asked ChatGPT to generate an image using a description of the photo as a prompt.

I took the photograph into Photoshop and tried to match it to the AI version as closely as possible. In the AI image, the screen appears to be on the back of the phone, so I tried to add a glow to the back of the phone, covering it with a matte color rather than the frosted back in the original photo. I also extended her finger so that it looks slightly too long, and gave her skin a subtle glow. Again, though, the lighting in the AI image is so perfect that, if both were shown side by side, it would likely be obvious which is which.

The Crit

The elements above (only the ones we made, not anything AI-generated) are what we decided to show for the crit. We decided to projection map them in the Blue Shed, with a soundtrack Anne made to sound like an AI-deepfaked voice by recording each of us saying words individually and cutting them together. This, along with a piece of music we found online, created a decidedly creepy atmosphere that heightened the feeling of abnormality and uncertainty around the images.

This is what our set-up looked like:

humAIn

This project is an immersive installation that simulates the experience of encountering art perceived to be generated by artificial intelligence. Through the use of various mediums, it creates works that viewers will believe are AI-produced but that are, in fact, carefully crafted by humans to mimic AI output. The focus is not on questioning the authenticity or originality of the work, but rather on observing and analyzing people's reactions when they encounter what they assume is AI-created art. In doing so, the project explores the emotional and intellectual responses to the idea of AI in the creative process, shedding light on how society perceives and engages with machine-generated content.

Below is a timelapse of our set-up and a video of it in action.

Below those are two screenshots, one of MadMapper, and one of the projector output.

After the Crit

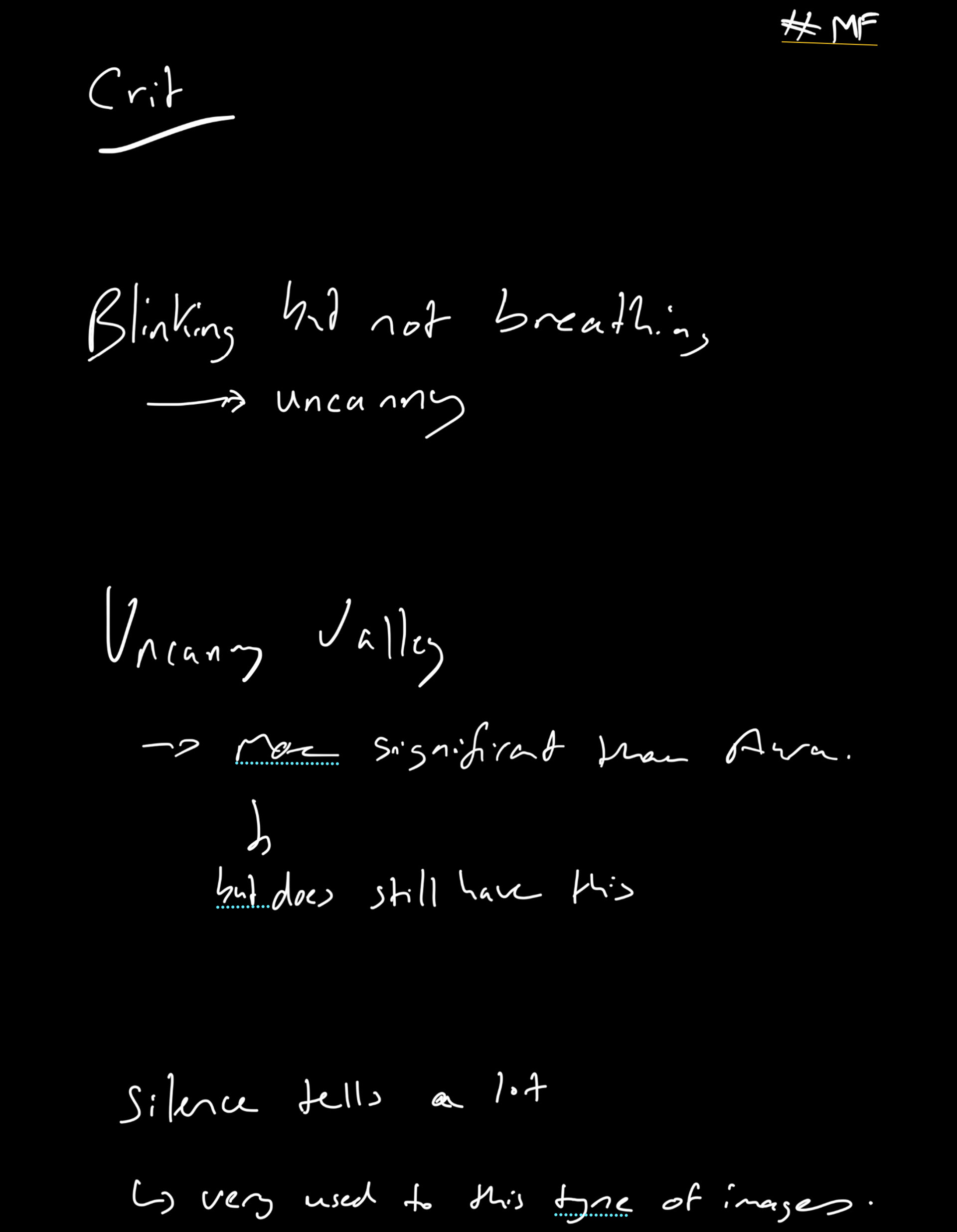

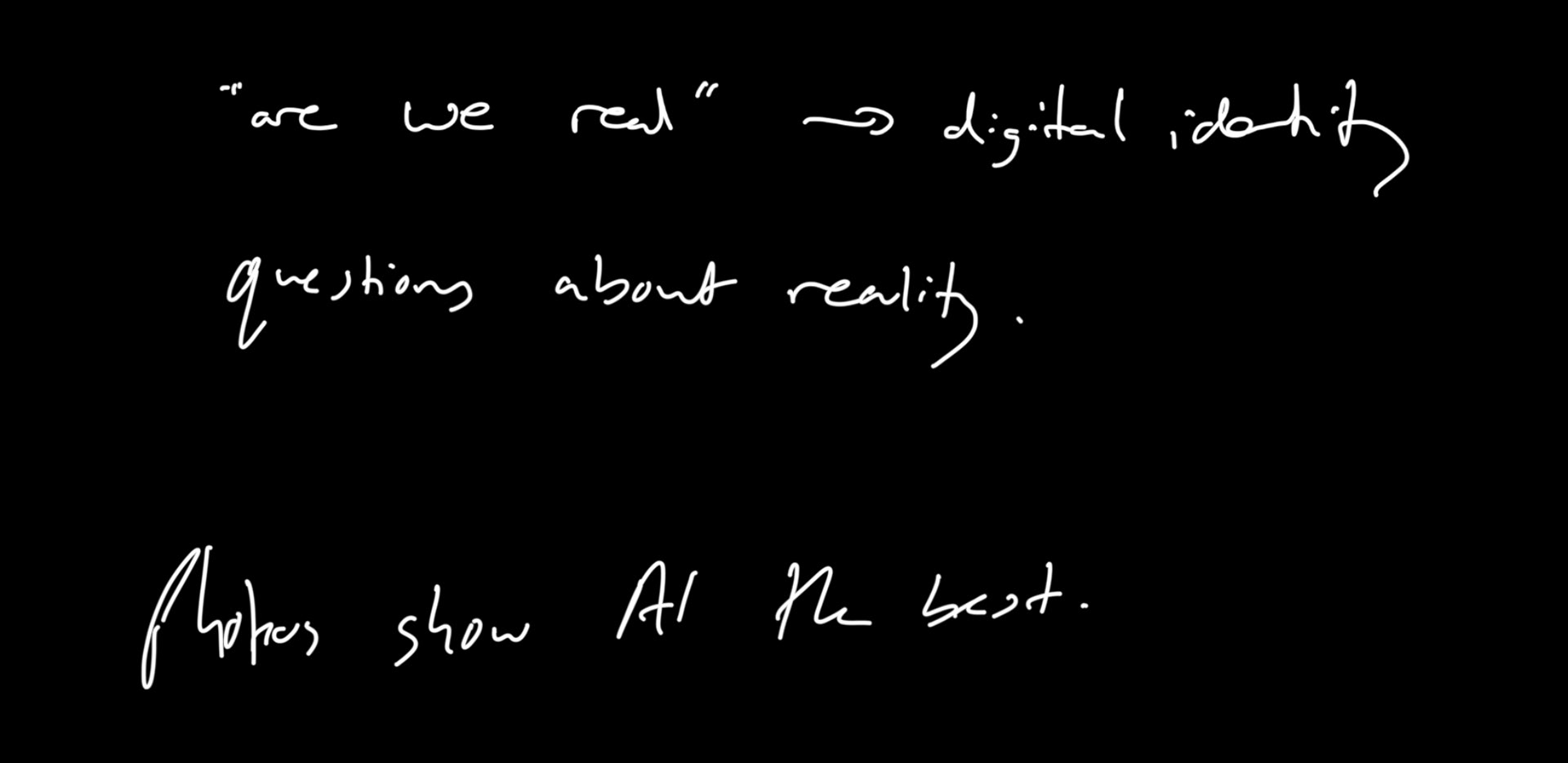

These are my notes from the crit:

I think this was extremely interesting. I certainly think that we are all very used to this kind of imagery now, and so have become somewhat desensitised to it. On the whole, people thought that some of the imagery was human-generated and some was AI. The photos were the most convincing, but a few people mentioned that the videos would have been harder to judge if the subjects had been people they didn't know, rather than us.

Overall, I think our project went well and was well received. I think AI is a powerful tool, and one that we should be both wary of and prepared to embrace. I think the current issue is that it's going the wrong way: I want it to help me, speed up my workflow and enhance my creative process, not replace it and do it for me. There were a few seminars and conversations that I haven't mentioned in my blog, largely because I didn't want to get into the intricacies and nuances of other people's opinions that, to me, seem totally alien, but I completely second everything Anne said in her documentation.

To say that 'AI doesn't take away jobs, it gave me one, because I used AI to generate frames for an animation that I couldn't do on my own' is absurd: that isn't AI giving you a job, that's it taking a job away from an animator who had the skills to actually animate. Ultimately, it will come down to money versus output. If it is cheaper to produce content with AI, then many people will do that. And, unlike photography, Photoshop or steam trains, it doesn't create work for anyone; all it does is allow parsimonious company executives to steamroll through a ChatGPT prompt list and not have to pay out a penny for any design work, photography or original creative thought. Photography didn't remove artists, and Photoshop didn't remove airbrush artists; AI won't remove creatives either, I am sure. But it certainly will decrease the opportunities for them, on an unprecedented scale.

As I said in my essay last year: "There is a significant difference between advancements that aid our development: medicinal and scientific technology that could cure our cancers and send us through the stars; uplifting us and helping us strive forwards; and that which only aids in our stupefaction, congealing us down into the human in WALL-E. This is not the fault of technology, though, but rather how we use it; if we choose laziness and lethargy over active engagement in technology, then lazy and lethargic is what we will become. [...] Maybe the ghost in the machine was us all along, projecting what we hope will exist into what never can; maybe we are the equivalent of the weavers who wanted to destroy the first looms. If the essence of technology is nothing technological, then it is our intervention that stops it being inert and creates it into whatever it will become, until such time when it can do this by itself. Maybe we are simply the sex organs of the machine world, and this is our next evolutionary step. Will androids dream of electric sheep? Or will they dream of the machine utopia? Perhaps Heidegger was ahead of his time, and the title of his essay should instead have been ‘The Question? Concerning technology.’"